Introduction to Riffusion

Artificial intelligence is rapidly transforming how we create and experience music. From generating lyrics to composing full tracks, AI tools are opening up new possibilities for artists, hobbyists, and technologists alike. One such tool is Riffusion, a system that turns text prompts into music in near real time. Built on top of Stable Diffusion, a popular text-to-image model, Riffusion reimagines music creation by generating spectrogram images that can be converted into playable audio. Whether you're curious about AI-generated sound or eager to experiment with new creative workflows, Riffusion offers a glimpse into the future of music production.

What Is Riffusion

Riffusion is a model that generates music from text prompts, developed by Hayk Martiros and Seth Forsgren as an experimental extension of Stable Diffusion. It approaches audio generation through a visual lens: sound is represented as spectrograms (graphical representations of audio frequencies over time), and the model uses diffusion techniques to generate new spectrograms that are then turned back into audio. Unlike AI music models that synthesize audio directly, Riffusion bridges image generation and sound, which makes it a hybrid in the AI music generation landscape. Because it can turn text prompts into music across many genres, it gives artists and enthusiasts a new tool for experimenting with melodies, rhythms, and textures, and it suggests a different way for music to be composed and imagined.

How Riffusion Works

Riffusion is a unique system that generates music not by synthesizing sound directly, but by producing spectrogram images — visual representations of audio frequencies over time. These images are then converted into actual audio clips. At the core of Riffusion is Stable Diffusion, a popular text-to-image model that was originally designed for generating realistic images from text prompts. The creators of Riffusion — Seth Forsgren and Hayk Martiros — adapted this model to synthesize spectrograms instead of pictures, opening the door to a new kind of sound creation.

Here’s how it works in simple terms:

- Text Prompt Input: Users type in a prompt, such as “funky jazz with saxophone” or “ambient techno with a deep bassline.”

- Spectrogram Generation: Riffusion uses Stable Diffusion to create a spectrogram image that visually encodes what that prompt might sound like.

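- Audio Reconstruction: The spectrogram image stores only the magnitude of each frequency, not its phase, so the phase is first estimated (for example with the Griffin-Lim algorithm). The result is then passed through an inverse Short-Time Fourier Transform (ISTFT), which reconstructs the waveform and turns the image into sound.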

- Real-Time Output: The system delivers results almost instantly, making it feel like you’re composing music on the fly.
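The audio reconstruction step can be sketched with plain NumPy. This is a minimal illustration under stated assumptions, not Riffusion's actual code: the function names (`stft`, `istft`, `griffin_lim`), the window size, the hop size, and the 8 kHz test tone are all chosen for the example. It uses Griffin-Lim, a standard way to estimate the phase that a magnitude-only spectrogram lacks, followed by an inverse STFT.

```python
import numpy as np

def stft(signal, n_fft=512, hop=128):
    """Short-time Fourier transform with a Hann window; returns (frames, bins)."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(signal) - n_fft) // hop
    frames = np.stack(
        [signal[i * hop : i * hop + n_fft] * window for i in range(n_frames)]
    )
    return np.fft.rfft(frames, axis=1)

def istft(spec, n_fft=512, hop=128):
    """Inverse STFT: windowed overlap-add, normalised by the summed squared window."""
    window = np.hanning(n_fft)
    n_frames = spec.shape[0]
    length = n_fft + hop * (n_frames - 1)
    out = np.zeros(length)
    norm = np.zeros(length)
    frames = np.fft.irfft(spec, n=n_fft, axis=1)
    for i in range(n_frames):
        start = i * hop
        out[start : start + n_fft] += frames[i] * window
        norm[start : start + n_fft] += window ** 2
    return out / np.maximum(norm, 1e-8)

def griffin_lim(magnitude, n_iter=32, n_fft=512, hop=128, seed=0):
    """Estimate a waveform from STFT magnitudes by iteratively refining the phase."""
    rng = np.random.default_rng(seed)
    # Start from random phase, since the spectrogram image carries none.
    phase = np.exp(2j * np.pi * rng.random(magnitude.shape))
    for _ in range(n_iter):
        audio = istft(magnitude * phase, n_fft, hop)
        rebuilt = stft(audio, n_fft, hop)
        # Keep the known magnitudes, adopt the phase of the re-analysed signal.
        phase = np.exp(1j * np.angle(rebuilt))
    return istft(magnitude * phase, n_fft, hop)

# Stand-in for a generated spectrogram: the magnitudes of a 440 Hz test tone.
sample_rate = 8000
tone = np.sin(2 * np.pi * 440 * np.arange(sample_rate) / sample_rate)
magnitude = np.abs(stft(tone))
audio = griffin_lim(magnitude)
print(audio.shape)
```

In a real pipeline the `magnitude` array would come from decoding the pixel values of the generated image rather than from a test tone, and a mel-scaled spectrogram would first need to be mapped back to linear frequency bins.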

One standout feature of Riffusion is its prompt interpolation capability. This allows the model to blend two or more text prompts over time, creating smooth transitions between genres or instruments — like going from "classical piano" to "lo-fi hip hop beats" in a single track.
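To make the idea of blending prompts concrete, here is a small sketch of interpolating between two vectors. It assumes each prompt has already been encoded as a 1-D embedding vector; the random vectors below are stand-ins, and `lerp`, `slerp`, and `interpolation_schedule` are hypothetical helper names for this example, not functions from the Riffusion code base. Spherical interpolation (slerp) is a common choice for diffusion latents; whether Riffusion uses exactly this formula is an assumption here.

```python
import numpy as np

def lerp(a, b, t):
    """Linear interpolation between vectors a and b for t in [0, 1]."""
    return (1.0 - t) * a + t * b

def slerp(a, b, t):
    """Spherical interpolation: moves along the arc between a and b."""
    a_unit = a / np.linalg.norm(a)
    b_unit = b / np.linalg.norm(b)
    omega = np.arccos(np.clip(np.dot(a_unit, b_unit), -1.0, 1.0))
    sin_omega = np.sin(omega)
    if sin_omega < 1e-6:
        # Vectors are (anti)parallel; the arc is undefined, fall back to lerp.
        return lerp(a, b, t)
    return (np.sin((1.0 - t) * omega) / sin_omega) * a + (
        np.sin(t * omega) / sin_omega
    ) * b

def interpolation_schedule(start, end, steps):
    """One blended vector per clip, moving evenly from start to end."""
    return [slerp(start, end, t) for t in np.linspace(0.0, 1.0, steps)]

# Stand-ins for the embeddings of "classical piano" and "lo-fi hip hop beats".
rng = np.random.default_rng(0)
piano = rng.standard_normal(8)
lofi = rng.standard_normal(8)
blend = interpolation_schedule(piano, lofi, steps=5)
print(len(blend))
```

Each vector in `blend` would condition one generated clip, so consecutive clips drift gradually from the first prompt toward the second.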

By bridging image synthesis and audio processing, Riffusion introduces a completely new way to think about music composition — one that’s visual, interactive, and deeply experimental.

Riffusion Benefits and Limitations

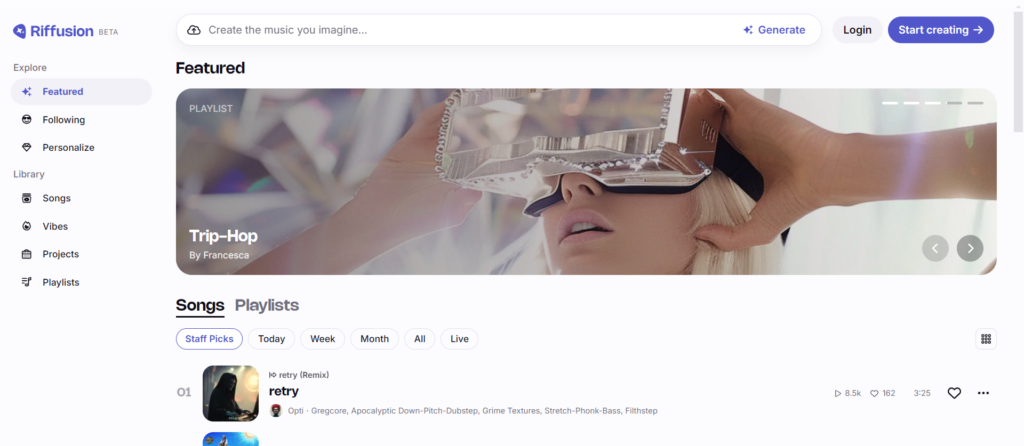

Key Features of Riffusion

Riffusion offers a flexible and open approach to music creation. Its key features include:

- Custom prompt sound design: Users enter a text description (such as "jazz drum beats fused with electronic sound effects") to generate personalized music clips, and latent space interpolation produces seamless transitions between different styles of audio.

- Interactive web application interface: Prompts can be edited in real time, parameters adjusted, and results auditioned immediately, which lowers the technical barrier to making music.

- Real-time music generation: Thanks to an optimized model architecture, Riffusion produces audio within seconds, reconstructing it from mel spectrograms with the inverse short-time Fourier transform (ISTFT).

- Open source project: The code and model are available through platforms such as Hugging Face, so developers can extend the model or embed it in third-party applications, which helps make AI music technology more widely available and encourages an ecosystem of new tools.

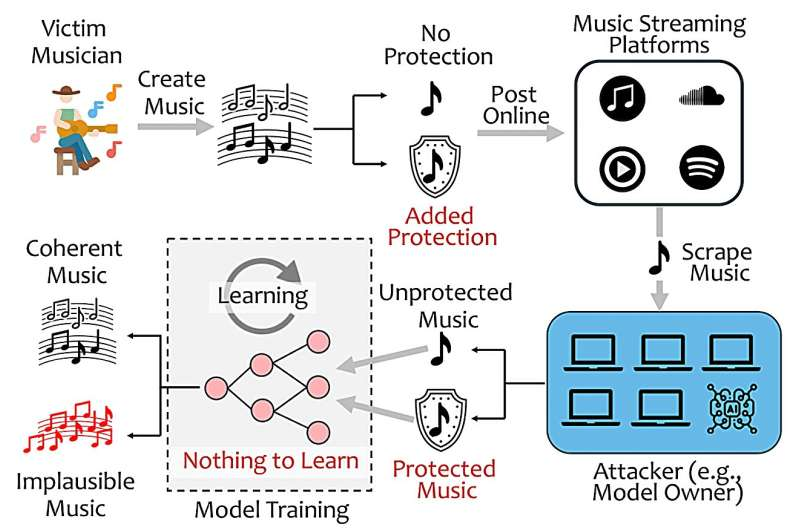

Limitations of Riffusion

Riffusion's spectrogram-based approach comes with several constraints:

- Spectrogram constraints: Image resolution limits audio fidelity, the frequency range is restricted, and there is a tradeoff between time and frequency resolution.

- Technical implementation: The model has high computational resource requirements, real-time generation has limits, and longer compositions are hard to scale to.

- Musical structure and coherence: The model struggles to maintain thematic consistency, offers limited control over how a piece progresses, and has difficulty with harmonic development.

- Control granularity: Instruments cannot be isolated precisely, exact musical elements are hard to specify, and fine-tuning options are limited.

- Genre coverage: Performance is uneven across genres, biased toward the distribution of the training data, and weaker on niche styles.

- Workflow integration: Fitting Riffusion into standard music production workflows is difficult because of compatibility issues with DAW software, limited export options, and post-processing difficulties.

- Training data dependence: Output quality is bounded by the training data, which also introduces cultural and stylistic biases and leaves gaps in how certain musical traditions are represented.
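The time-frequency tradeoff mentioned above follows from simple arithmetic on the spectrogram layout. The sketch below is illustrative only: `spectrogram_resolution` is a hypothetical helper, and the sample rate, window sizes, hop length, and 512-column image width are assumed values for the example, not Riffusion's documented settings.

```python
def spectrogram_resolution(sample_rate, n_fft, hop_length, n_frames):
    """Return frequency bin spacing, time resolution, and clip length for an STFT layout."""
    return {
        # Spacing between adjacent frequency bins: finer with a longer window.
        "freq_resolution_hz": sample_rate / n_fft,
        # Duration of one analysis window: events shorter than this get smeared.
        "window_ms": 1000.0 * n_fft / sample_rate,
        # Time advanced per spectrogram column.
        "time_step_ms": 1000.0 * hop_length / sample_rate,
        # Audio covered by n_frames columns (one image width).
        "clip_seconds": (n_fft + hop_length * (n_frames - 1)) / sample_rate,
    }

# Assumed values: 44.1 kHz audio, a 512-column image, two candidate window sizes.
short_window = spectrogram_resolution(44100, n_fft=2205, hop_length=441, n_frames=512)
long_window = spectrogram_resolution(44100, n_fft=4410, hop_length=441, n_frames=512)

for name, layout in [("short window", short_window), ("long window", long_window)]:
    print(name, layout)
```

Doubling the window length halves the spacing between frequency bins but doubles the span of time each column averages over, and a fixed image width caps the clip length at a few seconds. That is why a fixed-size spectrogram image cannot be sharp in both pitch and timing at once.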

What Are the Alternatives to Riffusion?

Although Riffusion has opened up a new path for composition by generating music through visual spectrograms, technical bottlenecks such as limited clip length and phase distortion still restrict its wider application. In response, the developer community and research teams have proposed a variety of alternatives. These tools improve on audio length, multi-track control, or real-time interactivity, providing additional options for music creation in different scenarios. The following sections discuss alternatives to Riffusion and how they differ.

Comparison of AI Music Generation Tools

| Tool Name | Core Function | Output Type | Best Use Cases | Vocal Support | Open Source | Commercial Use |
| --- | --- | --- | --- | --- | --- | --- |
| Riffusion | Text-to-spectrogram music generation using Stable Diffusion | Audio clips (loopable) | Creative music exploration, live sound experiments | ❌ No | ✅ Yes | ⚠️ Attribution recommended |
| Remusic | Creates high-quality music and full-length songs online | Full tracks with vocals | Original song creation, video soundtracks, ads, commercial content | ✅ Yes | ❌ No | ✅ Allowed |
| Suno AI | Full-song generation (vocals + lyrics + music) | Full song tracks | Songwriting, viral content, demo creation | ✅ Yes | ❌ No | ✅ Allowed (via license) |
| Udio | Realistic AI vocals with songwriting capability | Full song tracks (with multi-style vocals) | Song production, TikTok hits, audio storytelling | ✅ Yes | ❌ No | ✅ Allowed (with Pro plan) |
| Soundraw | AI-generated royalty-free instrumental music | Instrumental audio | Background music for video, ads, podcasts | ❌ No | ❌ No | ✅ Allowed (via subscription) |

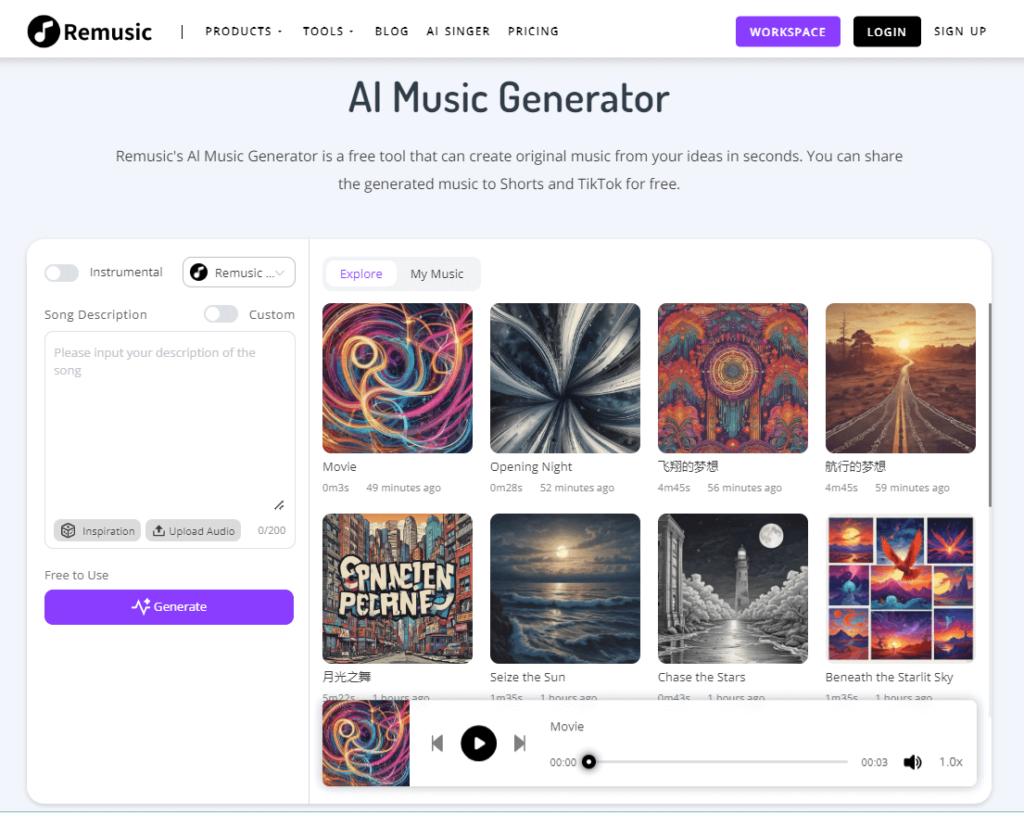

1. Remusic

For those seeking a more versatile music tool, Remusic offers a well-rounded alternative. It provides greater control over composition elements, allowing users to fine-tune melodies, structure, and instrumentation. Its AI music generator is designed to produce more dynamic and varied pieces, reducing repetitiveness. Additionally, Remusic supports a wider range of musical genres, making it a better fit for artists with diverse creative needs.

If you’re looking for an AI music generator that combines flexibility, variety, and creative control, Remusic is worth exploring as an alternative to Riffusion.

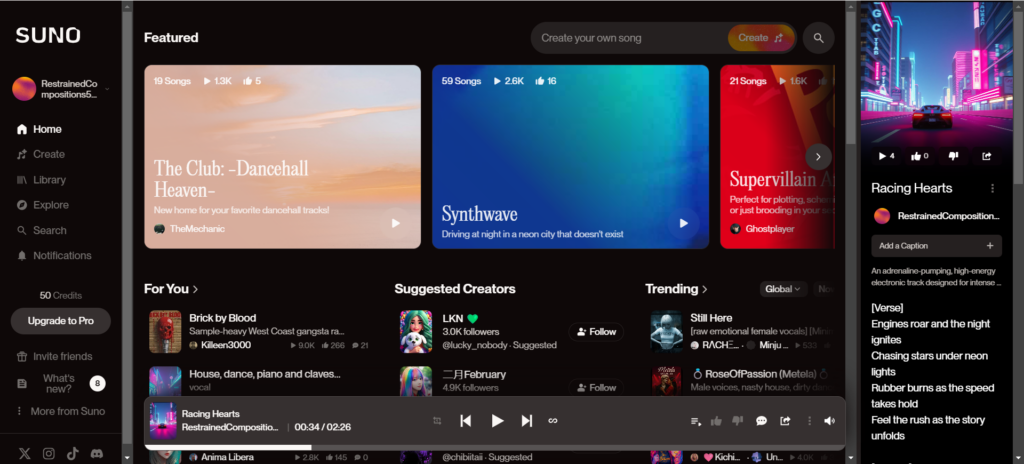

2. Suno

As an alternative to Riffusion, Suno focuses on generating complete songs, including vocals, melodies, and lyrics. Users only need to enter a text prompt to generate a complete musical work, and the tool is especially good at blending pop, rock, electronic, and other styles. Its core highlight is high-fidelity vocal synthesis, which produces natural, smooth singing and greatly simplifies the creation of song prototypes and demos.

3. Udio

Udio is an AI song generator with user experience at its core. Its advantage lies in efficiently producing high-quality music in multiple styles, and its intuitive interface lets all types of users get started quickly. However, public information about the platform is currently limited, and the specific parameters and pricing of its advanced functions have not yet been clarified, so it may be better suited to users with basic needs who want to create efficiently. The tool is mainly aimed at content creators and music lovers who need to generate high-quality music quickly, especially those who value ease of use.

4. Soundraw

Soundraw is an AI music generator that focuses on creative efficiency. Its advantage is that users can generate personalized tracks by customizing mood, style, and length, and its intuitive interface is especially suited to rapid production. However, the free version has limited functions, and advanced music generation capabilities must be unlocked through a paid subscription. In addition, music created by the tool may not be as complex or emotionally expressive as human work.

FAQs about Riffusion

Q1: What is Riffusion?

A1: Riffusion is an artificial intelligence system that converts text prompts into spectrograms, which are then transformed into playable audio. It blends deep learning with real-time music generation.

Q2: How does Riffusion generate music?

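A2: Riffusion uses a version of Stable Diffusion adapted to produce spectrograms instead of pictures. Given a text prompt, the model generates a spectrogram image, and an inverse Short-Time Fourier Transform then converts that image into playable audio.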

Q3: Is Riffusion free to use?

A3: Yes, Riffusion offers a free version for users to explore its core features, though advanced options may require a subscription or purchase.

Q4: Can I use Riffusion for commercial projects?

A4: It depends on the license. Check Riffusion’s terms of use, as commercial usage might be limited or require permission for certain outputs.

Q5: What are the system requirements for running Riffusion?

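A5: The Riffusion web app runs in your browser, so no powerful hardware is needed on your side. A stable internet connection and a modern web browser (like Chrome or Firefox) are usually enough. Running the open-source model yourself is more demanding, because the model has high computational resource requirements.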

Conclusion

Riffusion is redefining the way music is created. Whether you are a novice or an experienced producer, you can generate a unique melody just by entering a sentence. It is not only a creative tool but also a new means of expression.

Try Riffusion now and generate your own music!